#-Python Web Crawler Development


Advanced Python Training: Master High-Level Programming with Softcrayons


In today's tech-driven world, knowing Python has become critical for students and professionals in data science, AI, machine learning, web development, and automation. While fundamental Python offers a strong foundation, true mastery comes from diving deeper into complex concepts. That’s where Advanced Python training at Softcrayons Tech Solution plays a vital role. Whether you're a Python beginner looking to level up or a developer seeking specialized expertise, our advanced Python training in Noida, Ghaziabad, and Delhi NCR offers the perfect path to mastering high-level Python programming.

Why Advanced Python Training Is Essential in 2025

Python continues to rule the programming world due to its flexibility and ease of use. However, fundamental knowledge is no longer sufficient in today’s competitive business landscape. Companies are actively seeking professionals who can apply advanced Python principles in real-world scenarios. This is where Advanced Python training becomes essential, equipping learners with the practical skills and deep understanding needed to meet modern industry demands.

Our Advanced Python Training Course is tailored to make you job-ready. It’s ideal for professionals aiming to:

Build scalable applications

Automate complex tasks

Work with databases and APIs

Dive into data analysis and visualization

Develop back-end logic for web and AI-based platforms

This course covers high-level features, real-world projects, and practical coding experience that employers demand.

Why Choose Softcrayons for Advanced Python Training?

Softcrayons Tech Solution is one of the best IT training institutes in Delhi NCR, with a proven track record in delivering job-oriented, industry-relevant courses. Here’s what sets our Advanced Python Training apart:

Expert Trainers

Learn from certified Python experts with years of industry experience. Our mentors not only teach you advanced syntax but also guide you through practical use cases and problem-solving strategies.

Real-Time Projects

Gain hands-on experience with live projects in automation, web scraping, data manipulation, GUI development, and more. This practical exposure is what makes our students stand out in interviews and job roles.

Placement Assistance

We provide 100% placement support through mock interviews, resume building, and company tie-ups. Many of our learners are now working with top MNCs across India.

Flexible Learning Modes

Choose from online classes, offline sessions in Noida/Ghaziabad, or hybrid learning formats, all designed to suit your schedule.

Course Highlights of Advanced Python Training

Our course is structured to provide a comprehensive learning path from intermediate to advanced level. Some of the major modules include:

Object-Oriented Programming (OOP)

Understand the principles of OOP including classes, inheritance, polymorphism, encapsulation, and abstraction. Apply these to real-world applications to write clean, scalable code.

File Handling & Exception Management

Learn how to manage files effectively and handle different types of errors using try-except blocks, custom exceptions, and best practices in debugging.
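A minimal sketch of the file-handling and exception ideas in this module, assuming a hypothetical input file with one integer per line. The names `InvalidRecordError`, `sum_numbers`, and `safe_sum` are illustrative only, not taken from the course material.

```python
import os
import tempfile


class InvalidRecordError(Exception):
    """Custom exception raised when a line in the input file cannot be parsed."""

    def __init__(self, line_no, text):
        super().__init__(f"line {line_no}: cannot parse {text!r}")
        self.line_no = line_no
        self.text = text


def sum_numbers(path):
    """Sum one integer per line, skipping blank lines."""
    total = 0
    # 'with' closes the file even if an exception is raised inside the block
    with open(path, encoding="utf-8") as fh:
        for line_no, line in enumerate(fh, start=1):
            line = line.strip()
            if not line:
                continue
            try:
                total += int(line)
            except ValueError as exc:
                # Re-raise as a domain-specific error, keeping the original as the cause
                raise InvalidRecordError(line_no, line) from exc
    return total


def safe_sum(path):
    """Return the sum, or None (after printing a message) if the file is missing or malformed."""
    try:
        return sum_numbers(path)
    except FileNotFoundError:
        print(f"missing file: {path}")
    except InvalidRecordError as err:
        print(f"bad data: {err}")
    return None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "numbers.txt")
        with open(path, "w", encoding="utf-8") as fh:
            fh.write("10\n20\n\n12\n")
        print(safe_sum(path))  # 42
        print(safe_sum(os.path.join(tmp, "missing.txt")))  # prints the message, then None
```

Catching specific exception types (rather than a bare `except:`) keeps genuine bugs visible while still handling the expected failure modes.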

Iterators & Generators

Master the use of Python’s built-in iterators and create your own generators for memory-efficient coding.
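To illustrate the difference between a hand-written iterator and a generator, here is a small sketch; the names `Countdown`, `fibonacci`, and `even_squares` are illustrative only.

```python
from itertools import islice


class Countdown:
    """A hand-written iterator: __iter__ returns self, __next__ produces values."""

    def __init__(self, start):
        self.current = start

    def __iter__(self):
        return self

    def __next__(self):
        if self.current <= 0:
            raise StopIteration  # tells the for-loop to stop
        value = self.current
        self.current -= 1
        return value


def fibonacci():
    """Infinite generator: each value is computed only when it is requested."""
    a, b = 0, 1
    while True:
        yield a
        a, b = b, a + b


def even_squares(numbers):
    """Generator pipeline stage: filters and transforms lazily, building no list."""
    for n in numbers:
        if n % 2 == 0:
            yield n * n


if __name__ == "__main__":
    print(list(Countdown(3)))            # [3, 2, 1]
    print(list(islice(fibonacci(), 8)))  # [0, 1, 1, 2, 3, 5, 8, 13]
    # sum() pulls one value at a time, so memory use stays flat even for large ranges
    print(sum(even_squares(range(10))))  # 120
```

The generator versions need no explicit state-tracking class: `yield` suspends the function and resumes it on the next request.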

Decorators & Lambda Functions

Explore advanced function concepts like decorators, closures, and anonymous functions that allow for concise and dynamic code writing.
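A short sketch showing a closure, a lambda, and a decorator side by side; `make_multiplier`, `count_calls`, and `greet` are hypothetical examples.

```python
import functools


def make_multiplier(factor):
    """Closure: the inner function keeps access to `factor` after this call returns."""
    def multiply(x):
        return x * factor
    return multiply


def count_calls(func):
    """Decorator that counts how many times the wrapped function is called."""
    @functools.wraps(func)  # copy __name__, __doc__, etc. from func onto wrapper
    def wrapper(*args, **kwargs):
        wrapper.calls += 1
        return func(*args, **kwargs)
    wrapper.calls = 0
    return wrapper


@count_calls
def greet(name):
    """Return a greeting."""
    return f"Hello, {name}!"


if __name__ == "__main__":
    triple = make_multiplier(3)
    print(triple(14))  # 42
    # A lambda is an anonymous one-expression function, handy as a sort key
    print(sorted(["pear", "fig", "banana"], key=lambda s: len(s)))  # ['fig', 'pear', 'banana']
    greet("Ada")
    greet("Linus")
    print(greet.__name__, greet.calls)  # greet 2
```

Without `functools.wraps`, `greet.__name__` would report `wrapper`, which makes logs and debugging harder.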

Working with Modules & Packages

Understand how to build and manage large-scale projects with custom packages, modules, and Python libraries.

Database Connectivity

Connect Python with MySQL, SQLite, and other databases. Perform CRUD operations and work with data using Python’s DB-API.
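For a self-contained illustration of CRUD through Python’s DB-API, the sketch below uses the standard-library `sqlite3` module with an in-memory database; the `students` table is hypothetical. MySQL drivers such as PyMySQL follow the same DB-API pattern but use `%s` placeholders instead of `?`.

```python
import sqlite3


def run_crud_demo():
    """Create, read, update and delete rows using the DB-API (sqlite3 module)."""
    conn = sqlite3.connect(":memory:")  # throwaway in-memory database
    try:
        cur = conn.cursor()
        cur.execute("CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT, score INTEGER)")
        # Create: '?' placeholders let the driver escape values (guards against SQL injection)
        cur.executemany(
            "INSERT INTO students (name, score) VALUES (?, ?)",
            [("Asha", 78), ("Ravi", 91), ("Meera", 85)],
        )
        # Update
        cur.execute("UPDATE students SET score = ? WHERE name = ?", (88, "Asha"))
        # Delete
        cur.execute("DELETE FROM students WHERE name = ?", ("Meera",))
        conn.commit()
        # Read
        cur.execute("SELECT name, score FROM students ORDER BY score DESC")
        return cur.fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    print(run_crud_demo())  # [('Ravi', 91), ('Asha', 88)]
```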

Web Scraping with BeautifulSoup & Requests

Build web crawlers to extract data from websites using real-time scraping techniques.
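The module itself uses BeautifulSoup and Requests. To keep this sketch runnable without third-party packages, it swaps in the standard library’s `html.parser` and parses an inline, hypothetical page; with BeautifulSoup the equivalent lookup would be roughly `BeautifulSoup(html, "html.parser").find_all("a", href=True)`.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from <a href="..."> tags."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL
                self.links.append(urljoin(self.base_url, href))


def extract_links(html, base_url):
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


SAMPLE_HTML = """
<html><body>
  <a href="/courses/python">Python</a>
  <a href="https://example.com/about">About</a>
  <a name="no-href">anchor only</a>
</body></html>
"""

if __name__ == "__main__":
    # In a real crawler the HTML would come from an HTTP fetch, e.g. requests.get(url).text
    print(extract_links(SAMPLE_HTML, "https://example.com/index.html"))
    # ['https://example.com/courses/python', 'https://example.com/about']
```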

Introduction to Frameworks

Get a basic introduction to popular frameworks like Django and Flask to understand how Python powers modern web development.

Who Can Join Advanced Python Training?

This course is ideal for:

IT graduates or B.Tech/MCA students

Working professionals in software development

Aspirants of data science, automation, or AI

Anyone with basic Python knowledge seeking specialization

Prerequisite: Basic understanding of Python programming. If you're new, we recommend starting with our Beginner Python Course before moving to advanced topics.

Tools & Technologies Covered

Throughout the Advanced Python Training at Softcrayons, you will gain hands-on experience with:

Python 3.x

PyCharm / VS Code

Git & GitHub

MySQL / SQLite

Jupyter Notebook

Web scraping libraries (BeautifulSoup, Requests)

JSON, API Integration

Virtual environments and pip

Career Opportunities After Advanced Python Training

After completing this course, you will be equipped to take up roles such as:

Python Developer

Data Analyst

Automation Engineer

Backend Developer

Web Scraping Specialist

API Developer

AI/ML Engineer (with additional learning)

Python is among the top-paying programming languages today. With the right skills, you can earn a starting salary of around ₹4–7 LPA, which can rise significantly with experience and expertise.

Certification & Project Evaluation

Softcrayons Tech Solution will provide you with a globally recognized Advanced Python Training certificate once you complete the course. In addition, your performance in capstone projects and assignments will be assessed to ensure that you are industry-ready.

Final Words

Python is more than simply a beginner's language; it's an effective tool for developing complex software solutions. Enrolling in our Advanced Python training course is more than just studying; it prepares you for a high-demand, high-growth career. Take the next step to becoming a Python master. Join Softcrayons today to turn your potential into performance. Contact us.


Scrape Product Info, Images & Brand Data from E-commerce | Actowiz

Introduction

In today’s data-driven world, e-commerce product data scraping is a game-changer for businesses looking to stay competitive. Whether you're tracking prices, analyzing trends, or launching a comparison engine, access to clean and structured product data is essential. This article explores how Actowiz Solutions helps businesses scrape product information, images, and brand details from e-commerce websites with precision, scalability, and compliance.

Why Scraping E-commerce Product Data Matters

E-commerce platforms like Amazon, Walmart, Flipkart, and eBay host millions of products. For retailers, manufacturers, market analysts, and entrepreneurs, having access to this massive product data offers several advantages:

- Price Monitoring: Track competitors’ prices and adjust your pricing strategy in real time.

- Product Intelligence: Gain insights into product listings, specs, availability, and user reviews.

- Brand Visibility: Analyze how different brands are performing across marketplaces.

- Trend Forecasting: Identify emerging products and customer preferences early.

- Catalog Management: Automate and update your own product listings with accurate data.

With Actowiz Solutions’ eCommerce data scraping services, companies can harness these insights at scale, enabling smarter decision-making across departments.

What Product Data Can Be Scraped?

When scraping an e-commerce website, here are the common data fields that can be extracted:

✅ Product Information

Product name/title

Description

Category hierarchy

Product specifications

SKU/Item ID

Price (Original/Discounted)

Availability/Stock status

Ratings & reviews

✅ Product Images

Thumbnail URLs

High-resolution images

Zoom-in versions

Alternate views or angle shots

✅ Brand Details

Brand name

Brand logo (if available)

Brand-specific product pages

Brand popularity metrics (ratings, number of listings)

By extracting this data from platforms like Amazon, Walmart, Target, Flipkart, Shopee, AliExpress, and more, Actowiz Solutions helps clients optimize product strategy and boost performance.

Challenges of Scraping E-commerce Sites

While the idea of gathering product data sounds simple, it presents several technical challenges:

Dynamic Content: Many e-commerce platforms load content using JavaScript or AJAX.

Anti-bot Mechanisms: Rate-limiting, captchas, IP blocking, and login requirements are common.

Frequent Layout Changes: E-commerce sites frequently update their front-end structure.

Pagination & Infinite Scroll: Handling product listings across pages requires precise navigation.

Image Extraction: Downloading, renaming, and storing image files efficiently can be resource-intensive.
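As a rough illustration of the pagination challenge, the sketch below follows `rel="next"` links across a hypothetical three-page listing held in memory (`FAKE_PAGES`), and keeps a visited set so that a page linking back to itself cannot cause an endless loop. Infinite-scroll pages usually load items through background JSON requests instead, which this sketch does not cover.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical listing pages keyed by URL, standing in for real HTTP responses.
FAKE_PAGES = {
    "https://shop.example/list?page=1":
        '<div class="product">Kettle</div><div class="product">Toaster</div>'
        '<a rel="next" href="/list?page=2">Next</a>',
    "https://shop.example/list?page=2":
        '<div class="product">Blender</div><a rel="next" href="/list?page=3">Next</a>',
    "https://shop.example/list?page=3":
        '<div class="product">Mixer</div>',  # last page: no "next" link
}


class ListingParser(HTMLParser):
    """Collect product names and the rel="next" link from one listing page."""

    def __init__(self):
        super().__init__()
        self.products = []
        self.next_href = None
        self._in_product = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "div" and attrs.get("class") == "product":
            self._in_product = True
        elif tag == "a" and attrs.get("rel") == "next":
            self.next_href = attrs.get("href")

    def handle_endtag(self, tag):
        if tag == "div":
            self._in_product = False

    def handle_data(self, data):
        if self._in_product and data.strip():
            self.products.append(data.strip())


def crawl_listing(start_url, fetch, max_pages=100):
    """Follow "next" links until there are none, a URL repeats, or max_pages is reached."""
    products, seen, url = [], set(), start_url
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        parser = ListingParser()
        parser.feed(fetch(url))
        products.extend(parser.products)
        # Resolve a relative href such as "/list?page=2" against the current page
        url = urljoin(url, parser.next_href) if parser.next_href else None
    return products


if __name__ == "__main__":
    print(crawl_listing("https://shop.example/list?page=1", FAKE_PAGES.__getitem__))
    # ['Kettle', 'Toaster', 'Blender', 'Mixer']
```

Passing `fetch` in as a parameter keeps the pagination logic testable offline; a real crawler would pass a function that performs the HTTP request.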

To overcome these challenges, Actowiz Solutions utilizes advanced scraping infrastructure and intelligent algorithms to ensure high accuracy and efficiency.

Step-by-Step: How Actowiz Solutions Scrapes E-commerce Product Data

Let’s walk through the process that Actowiz Solutions follows to scrape and deliver clean, structured, and actionable e-commerce data:

1. Define Requirements

The first step involves understanding the client’s specific data needs:

Target websites

Product categories

Required data fields

Update frequency (daily, weekly, real-time)

Preferred data delivery formats (CSV, JSON, API)

2. Website Analysis & Strategy Design

Our technical team audits the website’s structure, dynamic loading patterns, pagination system, and anti-bot defenses to design a customized scraping strategy.

3. Crawler Development

We create dedicated web crawlers or bots using tools like Python, Scrapy, Playwright, or Puppeteer to extract product listings, details, and associated metadata.

4. Image Scraping & Storage

Our bots download product images, assign them appropriate filenames (using SKU or product title), and store them in cloud storage like AWS S3 or GDrive. Image URLs can also be returned in the dataset.
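One possible way to derive such filenames, assuming each product has a SKU and a title: `image_filename` and its naming scheme are hypothetical, not Actowiz’s actual convention. The `index` argument distinguishes alternate views of the same product.

```python
import re
from pathlib import PurePosixPath
from urllib.parse import urlparse


def image_filename(sku, title, image_url, index=0):
    """Build a filesystem-safe name such as 'SKU-123-steel-kettle-0.jpg'."""
    # Lowercase the title and collapse every run of non-alphanumeric characters into one hyphen
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")[:50].rstrip("-")
    # Keep only characters that are safe in file names on common filesystems
    safe_sku = re.sub(r"[^A-Za-z0-9_-]", "", sku)
    # Take the extension from the URL path (query string ignored); fall back to .jpg
    suffix = PurePosixPath(urlparse(image_url).path).suffix.lower() or ".jpg"
    return f"{safe_sku}-{slug}-{index}{suffix}"


if __name__ == "__main__":
    print(image_filename("SKU-123", "Steel Kettle (1.5 L)",
                         "https://cdn.example/img/abc.JPG?w=800", 2))
    # SKU-123-steel-kettle-1-5-l-2.jpg
```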

5. Brand Attribution

Products are mapped to brand names by parsing brand tags, logos, and using NLP-based classification. This helps clients build brand-level dashboards.
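The text mentions NLP-based classification; the sketch below shows only a simpler, rule-based first pass (an alias dictionary with whole-word matching), which is a swapped-in technique for illustration. `BRAND_ALIASES` and `attribute_brand` are hypothetical.

```python
import re

# Hypothetical alias table: canonical brand -> spellings seen in listings.
BRAND_ALIASES = {
    "Samsung": ["samsung"],
    "Hewlett-Packard": ["hp", "hewlett packard", "hewlett-packard"],
    "Philips": ["philips"],
}

# Whole-word, case-insensitive patterns so "hp" does not match inside a longer word
_PATTERNS = [
    (brand, re.compile(r"\b" + re.escape(alias) + r"\b", re.IGNORECASE))
    for brand, aliases in BRAND_ALIASES.items()
    for alias in aliases
]


def attribute_brand(product, default="Unknown"):
    """Map a product to a canonical brand: use its brand tag if present, else its title."""
    explicit = (product.get("brand") or "").strip()
    text = explicit or product.get("title", "")
    for brand, pattern in _PATTERNS:
        if pattern.search(text):
            return brand
    # An unrecognised brand tag is kept as-is rather than discarded
    return explicit or default


if __name__ == "__main__":
    print(attribute_brand({"title": "HP 15s Laptop"}))  # Hewlett-Packard
```

A statistical or NLP classifier would typically take over only for listings this lookup cannot resolve.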

6. Data Cleansing & Validation

We apply validation rules, deduplication, and anomaly detection to ensure only accurate and up-to-date data is delivered.
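A minimal sketch of validation plus deduplication, assuming each record has `sku`, `price`, and an ISO-8601 `scraped_at` field. The rules (non-empty SKU, positive finite price, newest record wins) are illustrative assumptions, not a description of Actowiz’s pipeline.

```python
from decimal import Decimal, InvalidOperation


def clean_records(records):
    """Validate scraped rows, reject bad ones, and keep only the newest row per SKU."""
    latest, rejected = {}, []
    for rec in records:
        sku = (rec.get("sku") or "").strip()
        try:
            price = Decimal(str(rec.get("price")))
        except InvalidOperation:
            price = None  # e.g. "n/a" or a missing price
        # Illustrative rules: SKU present and price a positive, finite number
        if not sku or price is None or not price.is_finite() or price <= 0:
            rejected.append(rec)
            continue
        row = {**rec, "sku": sku, "price": price}
        stamp = row.get("scraped_at", "")
        previous = latest.get(sku)
        # ISO-8601 timestamps in the same format and time zone sort correctly as strings
        if previous is None or stamp >= previous.get("scraped_at", ""):
            latest[sku] = row
    return list(latest.values()), rejected
```

`Decimal` is used instead of `float` so that prices such as 17.49 are stored exactly.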

7. Data Delivery

Data can be delivered via:

REST APIs

S3 buckets or FTP

Google Sheets/Excel

Dashboard integration

All data is made ready for ingestion into CRMs, ERPs, or BI tools.
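To show how the same records can be delivered in more than one format, here is a sketch that serializes them to CSV (which opens directly in Excel or Google Sheets) and to JSON using only the standard library; the field names are hypothetical.

```python
import csv
import io
import json


def to_csv(records, fieldnames):
    """Serialize dict records to CSV text; keys not listed in fieldnames are dropped."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


def to_json(records):
    """Serialize records to pretty-printed JSON, keeping non-ASCII text readable."""
    return json.dumps(records, ensure_ascii=False, indent=2)


if __name__ == "__main__":
    products = [
        {"sku": "A1", "title": "Steel Kettle", "price": "17.49", "internal_id": 7},
        {"sku": "B2", "title": "Café Press", "price": "24.00", "internal_id": 8},
    ]
    print(to_csv(products, ["sku", "title", "price"]))
    print(to_json(products))
```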

Supported E-Commerce Platforms

Actowiz Solutions supports product data scraping from a wide range of international and regional e-commerce websites, including:

Amazon

Walmart

Target

eBay

AliExpress

Flipkart

BigCommerce

Magento

Rakuten

Etsy

Lazada

Wayfair

JD.com

Shopify-powered sites

Whether you're focused on electronics, fashion, grocery, automotive, or home décor, Actowiz can help you extract relevant product and brand data with precision.

Use Cases: How Businesses Use Scraped Product Data

Retailers

Compare prices across platforms to remain competitive and win the buy-box.

🧾 Price Aggregators

Fuel price comparison engines with fresh, accurate product listings.

📈 Market Analysts

Study trends across product categories and brands.

🎯 Brands

Monitor third-party sellers, counterfeit listings, or unauthorized resellers.

🛒 E-commerce Startups

Build initial catalogs quickly by extracting competitor data.

📦 Inventory Managers

Sync product stock and images with supplier portals.

Actowiz Solutions tailors the scraping strategy according to the use case and delivers the highest ROI on data investment.

Benefits of Choosing Actowiz Solutions

✅ Scalable Infrastructure

Scrape millions of products across multiple websites simultaneously.

✅ IP Rotation & Anti-Bot Handling

Bypass captchas, rate-limiting, and geolocation barriers with smart proxies and user-agent rotation.

✅ Near Real-Time Updates

Get fresh data updated daily or in real time via APIs.

✅ Customization & Flexibility

Select your data points, target pages, and preferred delivery formats.

✅ Compliance-First Approach

We follow strict guidelines and ensure scraping methods respect site policies and data usage norms.

Security and Legal Considerations

Actowiz Solutions emphasizes ethical scraping practices and ensures compliance with data protection laws such as GDPR, CCPA, and local regulations. Additionally:

Only publicly available data is extracted.

No login-restricted or paywalled content is accessed without consent.

Clients are guided on proper usage and legal responsibility for the scraped data.

Frequently Asked Questions

❓ Can I scrape product images in high resolution?

Yes. Actowiz Solutions can extract multiple image formats, including zoomable HD product images and thumbnails.

❓ How frequently can data be updated?

Depending on the platform, we support real-time, hourly, daily, or weekly updates.

❓ Can I scrape multiple marketplaces at once?

Absolutely. We can design multi-site crawlers that collect and consolidate product data across platforms.

❓ Is scraped data compatible with Shopify or WooCommerce?

Yes, we can deliver plug-and-play formats for Shopify, Magento, WooCommerce, and more.

❓ What if a website structure changes?

We monitor site changes proactively and update crawlers to ensure uninterrupted data flow.

Final Thoughts

Scraping product data from e-commerce websites unlocks a new layer of market intelligence that fuels decision-making, automation, and competitive strategy, whether that means tracking competitor pricing, enriching your product catalog, or analyzing brand visibility.

Actowiz Solutions brings deep expertise, powerful infrastructure, and a client-centric approach to help businesses extract product info, images, and brand data from e-commerce platforms effortlessly.

Unlocking the Web: How to Use an AI Agent for Web Scraping Effectively

In this age of big data, information has become one of the most powerful assets. However, accessing and organizing this data, particularly from the web, is no easy feat. This is where AI agents step in. By automating the extraction of valuable data from web pages, AI agents are changing the way businesses, developers, researchers, and marketers work.

In this blog, we’ll explore how you can use an AI agent for web scraping, what benefits it brings, the technologies behind it, and how you can build or invest in the best AI agent for web scraping for your unique needs. We’ll also look at how Custom AI Agent Development is reshaping how companies access data at scale.

What is Web Scraping?

Web scraping is a method of extracting data from websites. It is used for a range of purposes, including price monitoring, lead generation, market research, sentiment analysis, and academic research. In the past, web scraping was performed with scripts written in languages such as Python (with libraries like BeautifulSoup or Selenium); however, these scripts require constant maintenance and are often limited in scale and adaptability.

What is an AI Agent?

AI agents are intelligent software systems capable of making decisions and carrying out tasks on your behalf. In web scraping, AI agents use machine learning, NLP (Natural Language Processing), and automation to navigate websites intelligently, extract structured data, and adjust to changes in website layouts and algorithms.

Unlike traditional crawlers or basic bots, however, an AI agent doesn’t scrape blindly; it understands the context of its actions, adjusts its behavior, and improves over time.

Why Use an AI Agent for Web Scraping?

1. Adaptability

Websites change regularly, and traditional scrapers break when the page structure changes. AI agents use pattern recognition and contextual awareness to adapt as they go.

2. Scalability

AI agents can handle hundreds or even thousands of pages simultaneously, thanks to automated decision-making and cloud-based deployment.
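Much of this scalability comes from ordinary concurrency rather than AI as such. The sketch below fetches many pages in parallel with a bounded thread pool; `fake_fetch` is a hypothetical stand-in for a real HTTP call that simply sleeps to simulate network latency. Keeping `max_workers` small also limits the load placed on any single site.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def fake_fetch(url):
    """Hypothetical stand-in for an HTTP GET: sleeps to mimic latency, returns (url, size)."""
    time.sleep(0.05)
    return url, len(url)


def fetch_all(urls, fetch=fake_fetch, max_workers=10):
    """Run fetch() for every URL on a bounded thread pool and collect the results."""
    results = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(fetch, url) for url in urls]
        # as_completed yields each future as soon as it finishes, in any order
        for future in as_completed(futures):
            url, size = future.result()
            results[url] = size
    return results


if __name__ == "__main__":
    urls = [f"https://example.com/item/{i}" for i in range(50)]
    start = time.perf_counter()
    data = fetch_all(urls)
    # Sequentially this would take about 50 x 0.05 s = 2.5 s; 10 workers need roughly 0.25 s
    print(len(data), f"{time.perf_counter() - start:.2f}s")
```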

3. Data Accuracy

AI improves the accuracy of scraped data by filtering out noise, interpreting human language, and validating the results.

4. Reduced Maintenance

Because AI agents can learn and adapt, they reduce the need for continuous manual updates to scraping scripts.

Best AI Agent for Web Scraping: What to Look For

If you’re searching for the best AI agent for web scraping, here are the most important features to look for:

NLP Capabilities: For reading and interpreting unstructured text.

Visual Recognition: To interpret web page layouts and dynamic content.

Automation Tools: To simulate user interactions (clicks, scrolls, etc.).

Scheduling and Monitoring: Built-in tools to manage and automate scraping processes.

API Integration: To send scraped data directly to your database or application.

Error Handling and Retries: Intelligent fallback mechanisms that recover from broken sessions or denied access.
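As an example of the retry behavior described above, here is a sketch of exponential backoff with jitter. `TransientError` is a hypothetical exception type standing in for timeouts or HTTP 429/503 responses; waiting longer between attempts also gives an overloaded server room to recover.

```python
import random
import time


class TransientError(Exception):
    """Hypothetical error for failures worth retrying (timeouts, HTTP 429/503)."""


def with_retries(func, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call func(); after a TransientError wait base_delay * 2**attempt plus jitter, then retry."""
    for attempt in range(max_attempts):
        try:
            return func()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller decide what to do
            delay = base_delay * 2 ** attempt
            # Random jitter keeps many workers from retrying in lockstep
            sleep(delay + random.uniform(0, delay / 2))


if __name__ == "__main__":
    attempts = {"n": 0}

    def flaky_fetch():
        # Hypothetical request that fails twice before succeeding
        attempts["n"] += 1
        if attempts["n"] < 3:
            raise TransientError("HTTP 503")
        return "<html>ok</html>"

    print(with_retries(flaky_fetch, base_delay=0.1), "after", attempts["n"], "attempts")
```

Injecting `sleep` as a parameter makes the waits observable in tests without actually pausing.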

Custom AI Agent Development: Tailored to Your Needs

Although off-the-shelf AI agents can meet basic needs, Custom AI Agent Development is vital for businesses that require:

Custom-designed logic or workflows for data collection

Compliance with specific data policies or legal requirements

Integration with dashboards or internal tools

Competitive advantage via more efficient data gathering

At Xcelore, we specialize in AI Agent Development tailored for web scraping. Whether you’re monitoring market trends, aggregating news, or extracting leads, we build solutions that scale with your business needs.

How to Build Your Own AI Agent for Web Scraping

If you’re tech-savvy and want to build your own AI agent, here’s a basic outline of the process:

Step 1: Define Your Objective

Identify exactly what information you need and which sites it comes from. This forms the basis for your design and toolset.

Step 2: Select Your Tools

Popular frameworks and tools include:

Python, with libraries such as Scrapy, BeautifulSoup, and Selenium

Playwright or Puppeteer for browser automation

OpenAI and Hugging Face APIs for NLP and decision-making

Cloud platforms such as AWS, Azure, or Google Cloud for scaling capacity

Step 3: Train Your Agent

Provide your agent with examples of structured versus unstructured information. Machine learning can help it identify patterns and extract relevant information.

Step 4: Deploy and Monitor

Run your AI agent on a set schedule. Use alerting, logging, and dashboards to monitor its performance and verify data accuracy.
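One simple monitoring signal is the fill rate of each field per run: a sudden drop usually means a page layout changed and an extractor broke. The sketch below assumes records are dictionaries and uses a hypothetical 90% threshold; `fill_rates` and `check_run` are illustrative names.

```python
import logging

log = logging.getLogger("scraper.monitor")


def fill_rates(records, fields):
    """Fraction of records in which each field is present and non-empty."""
    total = len(records) or 1  # avoid dividing by zero on an empty run
    return {
        field: sum(1 for r in records if r.get(field) not in (None, "")) / total
        for field in fields
    }


def check_run(records, fields, threshold=0.9):
    """Log a warning for every field below the threshold; return the failing fields."""
    rates = fill_rates(records, fields)
    failing = [field for field, rate in rates.items() if rate < threshold]
    for field in failing:
        log.warning("field %r filled in %.0f%% of %d records",
                    field, rates[field] * 100, len(records))
    if not failing:
        log.info("run OK: %d records", len(records))
    return failing


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
    run = [
        {"title": "Kettle", "price": "17.49"},
        {"title": "Toaster", "price": ""},
        {"title": "Blender"},
        {"title": "Mixer", "price": "54.00"},
    ]
    print(check_run(run, ["title", "price"]))  # ['price'], after a warning for 'price' at 50%
```

In a scheduled deployment the warning would typically be routed to an alerting channel rather than the console.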

Step 5: Optimize and Iterate

Your AI agent should evolve. Use feedback loops and periodic machine learning retraining to improve its reliability and accuracy over time.

Compliance and Ethics

Web scraping raises ethical and legal issues. Make sure that your AI agent:

Respects robots.txt rules

Avoids scraping copyrighted or personal content

Meets international and local regulations on data privacy
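Checking robots.txt can be automated with the standard library’s `urllib.robotparser`. The sketch parses an inline, hypothetical robots.txt so that it runs offline; against a live site you would call `set_url()` and `read()` instead of `parse()`. The user-agent string is also hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example shop.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""


def build_robot_parser(robots_txt):
    """Parse robots.txt text that has already been fetched."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rules = build_robot_parser(ROBOTS_TXT)
    agent = "ExampleCrawler/1.0"  # hypothetical user-agent string
    print(rules.can_fetch(agent, "https://shop.example/products/42"))   # True
    print(rules.can_fetch(agent, "https://shop.example/checkout/cart"))  # False
    print(rules.crawl_delay(agent))  # 5 (seconds to wait between requests)
```

Honoring `Crawl-delay` as well as `Disallow` keeps the agent’s request rate within what the site has asked for.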

At Xcelore, we integrate compliance into every AI Agent Development project we manage.

Real-World Use Cases

E-commerce: Price tracking across competitors’ websites

Finance: Collecting stock news and financial statements

Recruitment: Extracting job postings and resumes

Travel: Monitoring hotel and flight prices

Academic Research: Large-scale data collection for analysis

In all of these situations, an intelligent and robust AI agent can turn hours of manual data collection into an efficient, scalable process.

Why Choose Xcelore for AI Agent Development?

At Xcelore, we bring together deep expertise in automation, data science, and software engineering to deliver powerful, scalable AI Agent Development Services. Whether you need a quick deployment or a fully custom AI agent development project tailored to your business goals, we’ve got you covered.

We can help:

Find scraping opportunities and devise strategies

Create and design AI agents that adapt to your demands

Maintain compliance and ensure data integrity

Transform unstructured web data into valuable insights

Final Thoughts

Using an AI agent for web scraping isn’t just a technical choice; it’s now a strategic advantage. From better insights to more efficient automation, the benefits are significant. Whether you’re looking to build your own AI agent or invest in the best AI agent for web scraping, the key lies in a well-planned strategy and skilled execution.

Are you ready to unlock the internet by leveraging intelligent automation?

Contact Xcelore today to get started with your custom AI agent development journey.


What Can Each Programming Language Do?

Many people start learning programming on impulse, searching the Internet for resources while their enthusiasm runs high. When I started learning, what I was studying wasn’t what I liked most; it turned out I preferred another technology.

Before learning a programming language, we should figure out which areas it applies to. This article describes the application areas of several programming languages and what each can do.

1. C

C is mainly used for operating systems, embedded systems, and servers. It is a powerful high-level language that is widely used for low-level systems programming. For example, the core of Microsoft Windows, the most widely used desktop operating system, is written in C. However, C is notoriously difficult to learn, though developers who know it well can command good salaries.

2. Java

Java is mainly used for enterprise application development, website platform development, mobile games, and Android development; for example, many e-commerce sites (Taobao, Tmall, etc.) are built with Java. Java is easier to learn than C. It currently has the most job openings on the market, but also the most competition.

3. Python

Python’s main application areas are web crawlers, data analysis, and machine learning, and some small and medium-sized enterprises also use it for back-end development. It is relatively easy to learn and offers high development efficiency. However, it lacks efficient and complete workflow tooling for product development at software companies.

4. C++

C++ is mainly used in games, office software, graphics processing, websites, search engines, relational databases, browsers, general software development, IDEs, and more. C++ has the second-largest number of job openings after Java, and it is fairly difficult to learn.

5. JavaScript

JavaScript was once used mainly for front-end development, where it established a strong position on the web, but it is now also used on the back end through Node.js. JavaScript is well worth learning for both front-end and back-end development.


Tips for Hiring the Best Python Developers

Hiring expert Python developers is essential to building scalable, efficient business applications. The hiring process starts with understanding the project requirements. Python developers need a comprehensive understanding of the language’s ecosystem, including its libraries and frameworks, deep knowledge of core Python concepts, familiarity with tools like MongoDB for database management, and automation skills to streamline development. Evaluating candidates on their technical skills, experience, and fit with your team dynamics and project goals helps you deliver high-quality, efficient solutions that meet project requirements.

If you want to hire the best Python developers, here are the key elements that can help you:

Explain the requirements in detail:

Define your project, outlining deliverables, goals, and technical specifications.

Core Python Proficiency:

This includes a strong grasp of object-relational mapping, data structures, and the nuances of software development and design.

Solve complex programming challenges:

Proficient Python developers are adept at optimizing code for performance and scalability. They implement efficient algorithms in clean, maintainable code that enhances the functionality and user experience of the applications they develop.

Python frameworks:

Frameworks like Django facilitate rapid Python development by providing reusable modules and patterns, significantly reducing development time and increasing project efficiency.

Python Libraries:

Look for expertise in Python's extensive libraries for manipulating data and building machine learning models.

Dataset Management:

Hire Python developers who are proficient at creating business applications that process and analyze large datasets, providing valuable insights and driving innovation.

Technical Skills:

Evaluate the developers' skills in core Python, frameworks, libraries, front-end development, and version control.

Experience:

Ask targeted questions to assess technical knowledge, soft skills, and experience handling complex projects.

Tech Prastish is a Python development service company offering end-to-end Python development services, including designing web crawlers for collecting information, developing feature-rich web apps, building data analytic tools, and much more.

Python’s versatility and broad range of applications make it a top choice for businesses looking to innovate. If you’re ready to find the perfect Python developer, look no further than Tech Prastish. With access to a top-tier talent pool, you’ll be well on your way to success. We can help you connect with developers who fit your specific needs, ensuring your project is in expert hands. Get started today with Tech Prastish and take your development to the next level.


Scrapy for SEO

In the world of search engine optimization (SEO), every bit of data counts. Understanding how search engines crawl and index web pages is crucial for optimizing a website's visibility. One tool that has gained significant traction among SEO professionals and developers alike is Scrapy. This powerful framework not only helps in scraping websites but also plays a pivotal role in enhancing SEO strategies.

What is Scrapy?

Scrapy is an open-source and collaborative framework for extracting data from websites. It is built using Python, making it highly flexible and easy to use for those familiar with the language. The framework allows users to create spiders: classes that crawl websites and extract specific data based on defined rules.

How Does Scrapy Help in SEO?

1. Competitor Analysis: Scrapy can be used to scrape competitor websites, gathering data on their content, keywords, link structure, and more. This information is invaluable for understanding what works well in your niche and identifying areas where you can improve.

2. Content Audit: Regularly auditing your own website’s content is essential for maintaining high-quality standards. Scrapy can help automate this process by extracting all the text, images, and links from your site, allowing you to analyze them for relevance, keyword density, and other SEO metrics.

3. Indexing Issues: Sometimes, search engines may have trouble indexing certain parts of your website. By using Scrapy to crawl your site the way a search engine would, you can identify these issues and make the necessary adjustments to ensure that all your content is easily accessible to search engines.

4. Real-Time Data: SEO is a dynamic field, and staying updated with the latest trends and changes is crucial. Scrapy can help you gather real-time data from various sources, providing insights into trending topics, popular keywords, and emerging patterns in user behavior.

Conclusion

While Scrapy is primarily known as a web scraping tool, its applications extend far beyond data extraction. In the realm of SEO, it offers a robust solution for automating tasks, gaining competitive intelligence, and ensuring that your website remains optimized for search engines. As you explore the capabilities of Scrapy, consider how it can be integrated into your SEO strategy to drive better results.

What are some ways you've used Scrapy in your SEO efforts? Share your experiences and insights in the comments below!
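To make the content-audit idea concrete, here is a sketch of the per-page checks such an audit might run. In a Scrapy spider the same fields could be pulled in `parse()` with selectors such as `response.css("title::text").get()`; this sketch uses the standard library’s `html.parser` instead so that it runs without Scrapy installed. The 60-character title limit is a common SEO rule of thumb, used here as an assumption.

```python
from html.parser import HTMLParser


class SEOAuditParser(HTMLParser):
    """Collect <title>, meta description, <h1> texts and the link count from one page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.h1 = []
        self.links = 0
        self._current = None  # "title" or "h1" while we are inside one of them

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "h1"):
            self._current = tag
            if tag == "h1":
                self.h1.append("")
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content")
        elif tag == "a" and attrs.get("href"):
            self.links += 1

    def handle_endtag(self, tag):
        if tag == self._current:
            self._current = None

    def handle_data(self, data):
        if self._current == "title":
            self.title += data
        elif self._current == "h1":
            self.h1[-1] += data  # also captures text of nested tags like <em>


def audit(html):
    """Return the extracted fields plus a list of simple SEO issues."""
    p = SEOAuditParser()
    p.feed(html)
    p.close()
    title = p.title.strip()
    issues = []
    if not title:
        issues.append("missing <title>")
    elif len(title) > 60:  # assumed rule of thumb
        issues.append("title longer than 60 characters")
    if not p.meta_description:
        issues.append("missing meta description")
    if len(p.h1) != 1:
        issues.append(f"expected 1 <h1>, found {len(p.h1)}")
    return {"title": title, "h1": [h.strip() for h in p.h1], "links": p.links, "issues": issues}


if __name__ == "__main__":
    print(audit("<html><body><h1>A</h1><h1>B</h1></body></html>")["issues"])
```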


Fighting Cloudflare's 2025 Risk Controls: A Breakdown of JA4 Fingerprint Disguise with Dynamic Residential Proxies

In 2025, with growing demand for web crawling and data collection, the risk control systems of major websites are constantly being upgraded. Cloudflare, an industry-leading security service provider, has a particularly powerful risk control system. To counter Cloudflare's 2025 risk controls, many crawler developers have turned to dynamic residential proxies combined with JA4 fingerprint disguise. This article breaks down how this technique works and how it is applied.

Overview of Cloudflare 2025 Risk Control Mechanism

Cloudflare's risk control system uses a series of complex algorithms and rules to identify and block potential malicious requests. These requests may include automated crawlers, DDoS attacks, malware propagation, etc. In order to deal with these threats, Cloudflare continues to update its risk control strategies, including but not limited to IP blocking, behavioral analysis, TLS fingerprint detection, etc. Among them, TLS fingerprint detection is one of the important means for Cloudflare to identify abnormal requests.

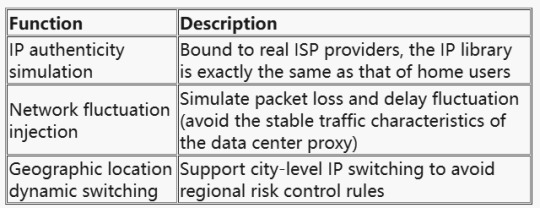

Technical Positioning of Dynamic Residential Proxy

The value of dynamic residential proxies has grown from simple "IP anonymity" to full-link environment simulation. Their core capabilities include:

Breaking down JA4 fingerprint camouflage

1. JA4 fingerprint generation logic

The JA4 fingerprint used by Cloudflare generates an identifier by hashing features of the TLS handshake. Key parameters include:

TLS version: TLS 1.3 is mandatory (version 1.2 and below will be eliminated in 2025);

Cipher suite order: browser default suite priority (such as TLS_AES_256_GCM_SHA384 takes precedence over TLS_CHACHA20_POLY1305_SHA256);

Extension field camouflage: SNI (Server Name Indication) and ALPN (Application-Layer Protocol Negotiation) values must exactly match those sent by a real browser.

Sample code: Python TLS client configuration

2. Collaborative strategy of dynamic proxy and JA4

Step 1: Pre-screening of proxy pools

Use ASN lookups (for example, via ipinfo.io) to keep only IPs that belong to residential ISPs (such as Comcast and AT&T); inject realistic user network noise (such as a random packet loss rate of 0.1%-2%).

Step 2: Dynamic fingerprinting

Assign an independent TLS profile to each proxy IP (simulating different browsers/device models);

Use the ja4x tool to generate fingerprint hashes to ensure that they match the whitelist of the target website.

Step 3: Request link encryption

Deploy a traffic obfuscation module (such as uTLS-based protocol camouflage) on the proxy server side;

Encrypt the WebSocket transport layer to bypass man-in-the-middle sniffing (MITM).

Countermeasures and risk assessment

1. Measured data (January-February 2025)

2. Legal and risk control red lines

Compliance: Avoid collecting personal data protected by the GDPR or CCPA, such as user identity and biometric information.

Countermeasures: Cloudflare has introduced JA5 fingerprinting (based on the TCP handshake), so the camouflage algorithm needs to be updated continuously.

Precautions in practical application

When combining dynamic residential proxies with JA4 fingerprint camouflage against Cloudflare's risk control, keep the following points in mind:

Proxy quality selection: Choose a high-quality, stable dynamic residential proxy service to ensure the effectiveness and anonymity of the proxy IPs.

Fingerprint camouflage strategy adjustment: As the target website and Cloudflare's risk control system are updated, adjust the JA4 fingerprint camouflage strategy promptly so that it stays effective.

Comply with laws and regulations: During data crawling, comply with applicable laws and regulations and the website's terms of use, and avoid infringing on the privacy and rights of others.

Risk assessment and response: Before using this technology, fully assess the risks you may face and prepare corresponding response measures to ensure that data crawling activities are legal and secure.

Conclusion

Dynamic residential proxies combined with JA4 fingerprint camouflage are an effective means of countering Cloudflare's 2025 risk control. By hiding the real IP address and simulating real user behavior and TLS fingerprints, we can reduce the risk of being flagged by the risk control system and improve the success rate and efficiency of data crawling. However, when implementing this strategy, we also need to pay attention to proxy quality, adjustment of the fingerprint camouflage strategy, and compliance with laws and regulations to ensure that data scraping activities are legal and secure.


Freelance Web Developer Near Me - How to Find the Best Expert for Your Website

Freelance web developer near me

In today's digital age, businesses and individuals need professional websites to establish an online presence. If you're searching for a freelance web developer near me, you're probably looking for a skilled professional who can deliver quality web solutions tailored to your needs. Hiring a freelance web developer offers flexibility, cost-effectiveness, and a personalized approach to web development.

Why Choose a Freelance Web Developer Near Me?

A freelance web developer near me offers several advantages over hiring a large agency:

1. Cost-Effective Services

Freelancers generally charge lower rates than web development agencies, making them an affordable choice for startups, small businesses, and personal projects.

2. Direct Communication

Working with a freelancer eliminates the middleman, ensuring clear and direct communication throughout the project.

3. Customized Approach

Freelancers offer customized services, tailoring websites to your brand identity, goals, and target audience.

4. Faster Turnaround Time

Freelancers often work with fewer clients at a time, allowing them to complete projects faster than agencies juggling multiple projects.

How to Find the Best Freelance Web Developer Near Me

Finding the right freelance web developer near me requires thorough research. Follow these steps to make an informed decision:

1. Search Online

Use search engines like Google and platforms like Upwork, Fiverr, LinkedIn, or Freelancer. Searching for "Freelance web developer near me" will return a list of professionals available in your area.

2. Review Portfolios

Look at the developer's past projects to evaluate their style, expertise, and quality of work. Look for experience with platforms like WordPress and Shopify, custom HTML/CSS, or JavaScript frameworks.

3. Read Client Reviews and Ratings

Client testimonials provide valuable insight into a freelancer's reliability, communication skills, and quality of work. Check reviews on platforms like Google My Business, social media, and freelancing websites.

4. Assess Technical Skills

A good freelancer should have expertise in front-end and back-end development, responsive design, SEO, and security best practices. Key technologies include:

HTML, CSS, JavaScript

PHP, Python, Node.js

WordPress, Shopify, Magento

React, Angular, Vue.js

5. Compare Pricing and Packages

Freelance developers offer different pricing models based on experience and project complexity. Compare rates, but avoid choosing the cheapest option at the expense of quality.

6. Discuss Availability and Support

Make sure the freelancer can meet deadlines and provide post-launch support for updates, bug fixes, and maintenance.

What Services Do Freelance Web Developers Offer?

A skilled freelance web developer near me can offer a range of services, including:

Custom Website Development - Building websites from scratch, tailored to your requirements.

E-commerce Website Development - Building online stores with secure payment gateways.

Website Redesign and Optimization - Upgrading existing websites for better performance.

SEO and Digital Marketing Integration - Optimizing websites for higher search engine rankings.

Website Maintenance and Security - Providing ongoing updates and security enhancements.

Freelance Web Developer vs. Web Development Agency

1. Cost

Freelancers: More affordable for small businesses and startups.

Agencies: Higher costs due to team management and overhead.

2. Flexibility

Freelancers: More adaptable and open to custom projects.

Agencies: Follow structured workflows, which may limit flexibility.

3. Communication

Freelancers: Direct and quick responses.

Agencies: Communication passes through multiple layers, which can cause delays.

Final Thoughts

Hiring a freelance web developer near me is a great choice for businesses and individuals looking for affordable, high-quality, and flexible web development solutions. Whether you need a personal website, a business website, or an e-commerce platform, take the time to research and choose a freelancer who fits your needs.

If you're looking for expert freelance web development services, check out Ajay Kumar Thakur for professional and customized web solutions. 🚀


Web scraping is one of the must-have data science skills. Because the internet is vast and grows larger every day, the demand for web scraping has increased as well, and extracted data has helped many businesses in their decision-making. At the same time, a web scraper always has to make sure it scrapes politely. This article helps beginners understand XPath and CSS selectors, the locator strategies that are an essential foundation of scraping.

A Brief Overview of the Steps in the Web Scraping Process

Once you understand the locator strategies for web elements, you can choose among the scraping technologies. As mentioned earlier, web scraping should be performed politely: respect the website's robots.txt, make sure the site's performance is never degraded, and have the crawler declare who it is along with contact information.

As for the available scraping technologies, scraping can be done by writing code in Python, Java, and other languages. For example, Python-based Scrapy and Beautiful Soup, as well as Selenium, are among the recommended technologies. There are also ready-made tools on the market that let you scrape websites without writing code.

Beautiful Soup is a popular web scraping library, but it is slower than Scrapy. Scrapy is also much more powerful and flexible for crawling than Selenium and Beautiful Soup. In this article, we refer to Scrapy, an efficient web crawling framework written in Python. Once it is installed, you can extract data from the desired web page with XPath or CSS selectors in several ways. This article focuses on how to derive XPath/CSS using automated testing tools.
Once the selectors are derived, they are provided to Scrapy, and the data is extracted.

How to Explore Web Page Elements

What are the elements we see on a web page, and which ones need to be selected? Try this on any web page: right-click it and choose 'Inspect'. The page's elements then appear in the developer tools pane. Choose the element selection tool, hover over the element you want to inspect, and the corresponding HTML code is highlighted in the inspection pane.

CSS and XPath - How Can They Be Viewed?

When using Scrapy, we need to know the CSS or XPath expression to use. XPath (XML Path Language) describes the path to a node in an XML or HTML document, so an XPath expression identifies a web page element. Similarly, CSS selectors find or select the HTML elements that you want to style.

We can derive either one in several ways:

Manually - Deriving them by hand is cumbersome, and even with the Inspect developer tool it is time-consuming. This is why a web scraper benefits from tools that find the XPath and CSS associated with the elements on a page.

Using web browser add-ons - For example, ChroPath is a Chrome add-on that shows the XPath, CSS selector, className, text, id, and so on, of the selected element. Once it is installed, these details are shown when you inspect the web page. For example, using ChroPath, the XPath and CSS can be viewed the following way:

Using web automation testing tools with built-in web element locators - The locator gives all the information a web scraper may need to select and extract data from the element in question.
This article explains how web automation tools can be used to extract the CSS and XPath.

A Scrapy Example - Using Web Automation Testing Tools to Derive CSS/XPath

We will be scraping the quotes.toscrape.com website in this article.

Note that the Scrapy tutorial also uses this website for its examples. We will extract the author names and the list of quotes on the website using Scrapy. The web page is as follows:

Launching Scrapy with the URL to be scraped

To start Scrapy and associate it with the URL we want to scrape, we run the following command, which starts the Scrapy shell:

scrapy shell "http://quotes.toscrape.com"

As mentioned earlier, there are several ways to derive the XPath and CSS selector. In this example, I show how any web automation test tool with web UI test recorders and locators can be used: launch the test recorder, select the web element, and read off its associated XPath and CSS. I used the TestProject tool to demonstrate how the XPath and CSS selector can be found. Once that is done, you can use the response.xpath() and response.css() methods to query responses with XPath and CSS, respectively.

XPath derivation using a web test automation tool, then scraping

For example, to scrape the quotes on the website with the derived XPath, we issue the following command in Scrapy. The result is as follows:

CSS derivation using a web test automation tool, then scraping

Similarly, you can derive the CSS path by right-clicking and copying the CSS value. Pass that value to the response.css command as follows, and the list of authors is extracted. The result is as follows:

Conclusion

Both XPath and CSS are syntaxes for targeting elements within a web page's DOM. It is a good idea to understand how XPath and CSS work internally so that you can decide which one to use.
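It is important to know that XPath is primarily a language for selecting nodes in XML documents, while CSS is a language for applying styles to HTML documents. In Scrapy, CSS selectors are translated into XPath internally (through the cssselect library), so CSS is not inherently faster there; CSS expressions are often shorter, while XPath is more expressive, for example when matching on text content or moving up to a parent element.

Thanks to today's tools that readily provide XPath and CSS details, the job of a web scraper is much easier.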
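The Scrapy commands in the example are not shown, but in a Scrapy shell the usual forms are response.xpath('//span[@class="text"]/text()').getall() for the quotes and response.css('small.author::text').getall() for the authors. As a rough offline illustration of what such XPath queries do, the sketch below runs attribute-based XPath against a small HTML snippet using Python's standard-library ElementTree, which supports only a limited XPath subset. The markup is a simplified assumption modeled on the quotes.toscrape.com layout (quote blocks containing span.text and small.author), and the quote strings are placeholders.

```python
import xml.etree.ElementTree as ET

# Simplified, well-formed markup modeled on the quote blocks of
# quotes.toscrape.com (assumed structure; text values are placeholders).
SAMPLE_HTML = """
<div class="col-md-8">
  <div class="quote">
    <span class="text">Placeholder quote one.</span>
    <span>by <small class="author">Author One</small></span>
  </div>
  <div class="quote">
    <span class="text">Placeholder quote two.</span>
    <span>by <small class="author">Author Two</small></span>
  </div>
</div>
"""


def extract_quotes(markup: str) -> list[dict[str, str]]:
    """Return one {"text", "author"} dict per quote block.

    ElementTree understands only a subset of XPath (".//" paths and simple
    [@attr='value'] predicates), which is enough for this snippet. Real pages
    are often not well-formed XML, which is one reason Scrapy relies on a
    full HTML parser (lxml) instead.
    """
    root = ET.fromstring(markup)
    quotes = []
    for block in root.findall(".//div[@class='quote']"):
        text = block.find(".//span[@class='text']")
        author = block.find(".//small[@class='author']")
        quotes.append({
            "text": text.text if text is not None else "",
            "author": author.text if author is not None else "",
        })
    return quotes


if __name__ == "__main__":
    for row in extract_quotes(SAMPLE_HTML):
        print(f"{row['author']}: {row['text']}")
```

The pattern mirrors the Scrapy approach: select each quote container first, then run relative queries inside it so each quote stays paired with its author.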


Top 10 Open Source Web Crawling Tools To Watch Out For In 2024

With technology constantly improving, smart devices and tools are becoming more common. One important aspect of this is data extraction, which is crucial for businesses today. Data is like gold on the internet, and collecting it is essential. In the past, people extracted data by hand, which was slow and difficult. Now, businesses can use modern web crawling tools to make this process easier and faster.

What Is a Web Crawling Tool?

A web crawler, sometimes called a bot, spider, or web robot, is a program that visits websites to collect information. The goal of these tools is to gather and organize data from the vast number of web pages available. By automating the data collection process, web crawlers can help you access important information quickly.
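To make the definition concrete, here is a minimal breadth-first crawler sketch using only Python's standard library. It is a generic illustration, not how any of the tools listed below work internally. The fetch function is an injected, hypothetical hook (so the sketch can run offline); in real use it would wrap an HTTP client and respect robots.txt and rate limits.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collect href values from <a> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url: str, fetch: Callable[[str], str], max_pages: int = 50) -> dict[str, str]:
    """Breadth-first crawl restricted to the start URL's host.

    `fetch` takes a URL and returns its HTML (hypothetical hook). Returns a
    mapping of visited URL -> HTML, in the order pages were visited.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    pages: dict[str, str] = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        try:
            html = fetch(url)
        except Exception:
            continue  # skip pages that fail to load
        pages[url] = html
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments so duplicates collapse.
            absolute, _ = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return pages
```

The `seen` set is what keeps the crawler from revisiting pages, and the host check keeps it from wandering off to other sites; both are the kind of behavior the tools below let you configure.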

According to a report by Domo, an enormous amount of data—2.5 quintillion bytes—was created every day in 2020. With such a huge volume of data on the internet, using a web crawler can help you collect and organize this information more efficiently.

Benefits of Web Crawling Tools

Web crawling tools function like a librarian who organizes a huge library, making it easy for anyone to find the books they need. Here are some benefits of using these tools:

Monitor Competitors: If you want to succeed in your business, it’s important to keep an eye on your competitors. Web crawlers can help you automatically collect data from their websites, allowing you to see their strategies, pricing, and more.

Low Maintenance: Many web crawling tools require very little maintenance. This means you can save time and focus on analyzing the data rather than fixing technical issues.

High Accuracy: Accurate data is crucial for making good business decisions. Web crawling tools can improve the accuracy of the data you collect, helping you avoid mistakes that can come from manual data entry.

Time-Saving: By automating the data collection process, web crawlers can save you hours of work. This allows you to focus on more important tasks that help your business grow.

Customizable: Many web crawling tools can be tailored to fit your specific needs. Even if you don’t have a technical background, open-source tools often provide simple ways to customize how you gather data.

Scalable: As your business grows, your data needs will increase. Scalable web crawling tools can handle large volumes of data without slowing down, ensuring you get the information you need.

What Are Open Source Web Crawling Tools?

Open-source software is free for anyone to use, modify, and share. Open-source web crawling tools offer a variety of features and can save data in formats like CSV, JSON, Excel, or XML. They are known for being easy to use, secure, and cost-effective.

A survey revealed that 81% of companies adopt open-source tools primarily for cost savings. This trend is expected to grow, with the open-source services market projected to reach $30 billion by 2022.

Why Use Open Source Web Crawling Tools?

Open-source web crawling tools are flexible, affordable, and user-friendly. They require minimal resources and can complete scraping tasks efficiently. Plus, you won’t have to pay high licensing fees, and community support is often available at no cost.

Top 10 Open Source Web Crawling Tools

There are many web crawling tools available. Here’s a list of some of the best open-source options:

ApiScrapy: Offers a range of user-friendly web crawlers built on Python. It provides 10,000 free web scrapers and a dashboard for easy data monitoring.

Apache Nutch: A highly scalable tool that allows fast data scraping. It’s great for automating your data collection.

Heritrix: Developed by the Internet Archive, this tool is known for its speed and reliability. It’s suitable for archiving large amounts of data.

MechanicalSoup: A Python library designed to automate web interactions and scraping efficiently.


Optimizing Web Crawling: Tips to Easily Improve Crawl Efficiency

Let's talk about a practical topic today - how to optimize web crawling. Whether you are a data scientist, a crawler developer, or a general web user interested in web data, I believe this article can help you.

I. Define the goal and plan first

Before you start crawling web pages, the most important step is to clarify your crawling goals. Which website's data do you want to crawl? Which fields do you need? How often will you crawl? Think all of these questions through first. With a clear goal, you can design a sensible crawling plan and avoid wasting resources on unfocused crawling.

II. Choose the right tools and frameworks

The next step is to choose a suitable web crawling tool and framework. There are many excellent options available, such as Scrapy and BeautifulSoup for Python and Cheerio for Node.js. Choosing the tool and framework that fit your needs can greatly improve crawling efficiency.

III. Optimize the crawling strategy

Optimizing the crawling strategy is key to improving crawling efficiency. Here are some practical suggestions:

Concurrent crawling: Multithreaded or asynchronous requests can significantly increase crawling speed, but keep the concurrency under control to avoid putting excessive load on the target website.

De-duplication mechanism: Duplicate data is inevitable during crawling, so an effective de-duplication mechanism is crucial. Data structures such as hash tables and Bloom filters can be used to implement it.

Intelligent waiting: For websites that require login or CAPTCHA verification, waiting intelligently, only as long as each step needs, reduces idle time in the crawling process. For example, after a successful login, wait a few seconds before proceeding to the next step.

Exception handling: Various exceptions can occur during crawling, such as network timeouts and page load failures. A thorough exception-handling mechanism keeps the crawling process stable and reliable.
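Several of these strategies can be combined in one small asyncio sketch: a semaphore caps concurrency, an order-preserving dictionary removes duplicate URLs, and each fetch is retried with exponential backoff. The fetch coroutine is a hypothetical hook standing in for a real async HTTP client, and the limits, the trailing-slash normalization, and the delays are illustrative assumptions rather than recommended values.

```python
import asyncio
import random
from typing import Awaitable, Callable, Optional

Fetch = Callable[[str], Awaitable[str]]


async def fetch_with_retry(fetch: Fetch, url: str, retries: int = 3,
                           base_delay: float = 0.5) -> Optional[str]:
    """Await fetch(url), retrying failures with exponential backoff plus jitter."""
    for attempt in range(retries + 1):
        try:
            return await fetch(url)
        except Exception:
            if attempt == retries:
                return None  # out of attempts; the caller sees None as a failure
            # base_delay, 2x, 4x, ... plus up to base_delay of random jitter
            await asyncio.sleep(base_delay * 2 ** attempt + random.uniform(0, base_delay))
    return None


async def crawl_concurrently(urls: list[str], fetch: Fetch, max_concurrency: int = 5,
                             **retry_opts) -> dict[str, Optional[str]]:
    """Fetch each distinct URL once, with at most max_concurrency fetches in flight."""
    # De-duplicate while keeping order. The normalization (strip a trailing "/")
    # is deliberately simple; a Bloom filter could replace this in-memory
    # structure for very large crawls, at the cost of rare false positives.
    unique = list(dict.fromkeys(url.rstrip("/") for url in urls))
    semaphore = asyncio.Semaphore(max_concurrency)

    async def worker(url: str) -> tuple[str, Optional[str]]:
        async with semaphore:  # limits load on the target site
            return url, await fetch_with_retry(fetch, url, **retry_opts)

    results = await asyncio.gather(*(worker(url) for url in unique))
    return dict(results)
```

Holding the semaphore during backoff sleeps is a deliberate choice: it slows the crawl slightly but keeps retries from adding extra parallel load on the site.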

IV. Set a reasonable crawling frequency

The crawling frequency also needs attention. Crawling too often can put pressure on the target site and may even get your IP blocked. When setting the crawl frequency, consider both the target site's load capacity and your own crawling needs; analyzing how often the target site updates helps you choose a reasonable frequency.
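One way to pick a per-site interval is to honor a Crawl-delay directive when the site's robots.txt declares one (a widely used but non-standard directive) and fall back to a conservative default otherwise. The sketch below uses the standard library's urllib.robotparser on robots.txt text that has already been downloaded, plus a small per-host throttle. The user-agent string, the 2-second default, and the injectable clock/sleep parameters are illustrative assumptions.

```python
import time
from typing import Callable, Optional
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

# Hypothetical crawler identity: name, version, and a contact address.
USER_AGENT = "ExampleCrawler/1.0 (+mailto:crawler@example.com)"


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    rules.modified()  # record a load time so can_fetch()/crawl_delay() trust the rules
    return rules


class HostThrottle:
    """Keep a minimum interval between requests to the same host."""

    def __init__(self, default_delay: float = 2.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.default_delay = default_delay
        self.clock = clock  # injectable so the throttle can be tested offline
        self.sleep = sleep
        self.last_request: dict[str, float] = {}

    def wait(self, url: str, rules: Optional[RobotFileParser] = None) -> float:
        """Block until the URL's host may be requested again; return seconds slept."""
        host = urlparse(url).netloc
        delay = self.default_delay
        if rules is not None:
            declared = rules.crawl_delay(USER_AGENT)
            if declared is not None:
                delay = max(delay, float(declared))  # never faster than the site asks
        now = self.clock()
        previous = self.last_request.get(host)
        pause = 0.0 if previous is None else max(0.0, previous + delay - now)
        if pause > 0:
            self.sleep(pause)
        self.last_request[host] = now + pause
        return pause
```

In a crawl loop, check rules.can_fetch(USER_AGENT, url) first, then call throttle.wait(url, rules) just before each request.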

V. Regular Maintenance and Updates

Finally, don't forget to maintain and update your crawling system regularly. With the changes of target websites and adjustments of crawling needs, you may need to constantly optimize your crawling strategy and code. Regularly checking and updating your crawling system will ensure that it always performs well and remains stable.

By using leading proxy IP services such as 711Proxy, companies can maintain the stability and accuracy of data flow when performing web crawling, improving the effectiveness of analysis and the precision of decision-making.


How to Scrape Liquor Prices and Delivery Status From Total Wine and More?

This tutorial is an educational resource to learn how to build a web scraping tool. It emphasizes understanding the code and its functionality rather than simply copying and pasting. It is important to note that websites may change over time, requiring adaptations to the code for continued functionality. The objective is to empower learners to customize and maintain their web scrapers as websites evolve.

We will use Python 3 and commonly used Python libraries to simplify the process. Additionally, we will use a powerful, free web scraping tool called Selectorlib. This combination of tools will make our liquor product data scraping tasks more efficient and manageable.

List Of Data Fields

Name

Size

Price

Quantity

InStock – whether the liquor is in stock

Delivery Available – whether the liquor is available for delivery

URL

Installing the Required Packages for Running the Total Wine Scraper

To scrape liquor prices and delivery status from the Total Wine and More store, we will follow these steps.

To follow along with this web scraping tutorial, having Python 3 installed on your system is recommended. You can install Python 3 by following the instructions provided in the official Python documentation.

Once Python 3 is installed, you must install two libraries: Python Requests and Selectorlib. Install them with pip3, the package installer for Python 3. Open your terminal or command prompt and run the following command:

pip3 install requests selectorlib

The Python Code

The Provided Code Performs The Following Actions:

Reads a list of URLs from a file called "urls.txt" containing the URLs of Total Wine and More product pages.

Utilizes a Selectorlib YAML file, "selectors.yml," to specify the data elements to scrape TotalWine.com product data.

Collects Total Wine product data by requesting the specified URLs and extracting the desired data with the Selectorlib library.

Stores the scraped data in a CSV spreadsheet named "data.csv."
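The original script is not reproduced here, so the following is only a sketch of those four steps under stated assumptions. It reads urls.txt, extracts fields with a Selectorlib Extractor built from selectors.yml, and writes data.csv. Requests and Selectorlib are imported inside main() so the helper functions can be tried without them. The helper names (read_urls, scrape_all, write_csv), the User-Agent value, and the timeout are hypothetical, and the columns written depend entirely on what your selectors.yml defines.

```python
import csv
from typing import Callable, Iterable, Optional


def read_urls(path: str = "urls.txt") -> list[str]:
    """Return the non-blank lines of the URL file."""
    with open(path, encoding="utf-8") as handle:
        return [line.strip() for line in handle if line.strip()]


def scrape_all(urls: Iterable[str],
               fetch: Callable[[str], Optional[str]],
               extract: Callable[[str], dict]) -> list[dict]:
    """Fetch each URL, run the extractor on its HTML, and tag rows with the URL."""
    rows = []
    for url in urls:
        html = fetch(url)
        if html is None:
            print(f"Skipping {url}: page could not be downloaded")
            continue
        row = extract(html) or {}
        row["URL"] = url
        rows.append(row)
    return rows


def write_csv(rows: list[dict], path: str = "data.csv") -> None:
    """Write rows to CSV, using the union of all keys as the header."""
    fieldnames: list[str] = []
    for row in rows:
        for key in row:
            if key not in fieldnames:
                fieldnames.append(key)
    with open(path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)  # missing keys -> ""
        writer.writeheader()
        writer.writerows(rows)


def main() -> None:
    # Third-party imports kept here so the helpers above work without them.
    import requests
    from selectorlib import Extractor

    extractor = Extractor.from_yaml_file("selectors.yml")
    headers = {"User-Agent": "ExampleScraper/1.0 (contact: you@example.com)"}  # hypothetical

    def fetch(url: str) -> Optional[str]:
        try:
            response = requests.get(url, headers=headers, timeout=30)
        except requests.RequestException:
            return None
        return response.text if response.status_code == 200 else None

    write_csv(scrape_all(read_urls(), fetch, extractor.extract))
```

To run it, call main() from a script entry point once urls.txt and selectors.yml exist in the working directory. Passing fetch and extract in as functions keeps the download and parsing steps swappable, for example to add delays between requests.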

Create the YAML File "selectors.yml"

We used a file called "selectors.yml" to specify the Total Wine product data elements we wanted to extract. You can create this file with a web scraping tool called Selectorlib.

Selectorlib is a powerful tool that simplifies selecting, marking up, and extracting data from web pages. With the Selectorlib Chrome extension, you can easily mark the data you need to collect and generate the corresponding CSS selectors or XPaths.

Selectorlib can make the data extraction process more visual and intuitive, allowing us to focus on the specific data elements we want to extract without manually writing complex CSS selectors.

To use Selectorlib, install its Chrome extension and use it to mark the data you want to extract from web pages. The tool then generates the corresponding CSS selectors or XPaths, which can be saved in a YAML file like "selectors.yml" and used in your Python code for efficient data extraction.

Running the Total Wine and More Scraper

To specify the URLs you want to scrape, create a text file named "urls.txt" in the same directory as your Python script. Inside "urls.txt", add the URLs of the liquor products you want to scrape, each on a new line. For example:

Run the Total Wine data scraper with the following command:

Common Challenges And Limitations Of Self-Service Web Scraping Tools And Copied Internet Scripts

Unmaintained code and scripts are a significant pitfall because they deteriorate over time and become incompatible with website changes. Websites are continuously updated, which can make existing code ineffective or break it entirely. Regular maintenance keeps these scripts functional and reliable by adapting them to evolving website structures. By staying proactive and keeping code up to date, developers can catch issues early and ensure their scripts keep working as intended.

Here are some common issues that can arise when using unmaintained tools:

Changing CSS Selectors: If the website's structure changes, the CSS selectors used to extract data, such as the "Price" selector in the selectors.yml file, may become outdated or ineffective. Regular updates are needed to adapt to these changes and ensure accurate data extraction.

Location Selection Complexity: Websites may require additional variables or methods to select the user's "local" store beyond relying solely on geolocated IP addresses. The code must handle this complexity, or retrieving location-specific data becomes difficult.

Addition or Modification of Data Points: Websites often introduce new data points or modify existing ones, which can impact the code's ability to extract the desired information. Without regular maintenance, the code may miss essential data or attempt to extract outdated information.

User Agent Blocking: Websites may block specific user agents to prevent automated scraping. If the code uses a blocked user agent, it may face restrictions or be denied access to the website.

Access Pattern Blocking: Websites employ security measures to detect and block scraping activities based on access patterns. If the code follows a predictable scraping pattern, it can trigger these measures and face difficulties accessing the desired data.

IP Address Blocking: Websites may block specific IP addresses or entire IP ranges to prevent scraping activities. If the code's IP address or the IP addresses provided by the proxy provider are blocked, it can lead to restricted or denied access to the website.
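Because selector breakage usually shows up as fields that suddenly come back empty, a cheap safeguard is to check each scraping run for exactly that. The sketch below flags required fields that are missing or blank in more than a chosen share of rows. The field names and the 50% threshold are illustrative assumptions, not part of the original tutorial.

```python
from typing import Any, Iterable


def is_blank(value: Any) -> bool:
    """Treat None and whitespace-only strings as missing."""
    return value is None or str(value).strip() == ""


def find_broken_fields(rows: list[dict], required: Iterable[str],
                       max_empty_ratio: float = 0.5) -> list[str]:
    """Return required fields that are blank in more than max_empty_ratio of rows.

    A field that suddenly comes back empty for most pages usually means its
    selector no longer matches the site's markup.
    """
    required = list(required)
    if not rows:
        return required  # an empty run is itself a warning sign
    broken = []
    for field in required:
        empty = sum(1 for row in rows if is_blank(row.get(field)))
        if empty / len(rows) > max_empty_ratio:
            broken.append(field)
    return broken
```

Running a check like this on each batch before writing data.csv, and alerting when the result is non-empty, gives early warning that selectors.yml needs updating.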

Conclusion: Utilizing a full-service solution, you can delve deeper into data analysis and leverage it to monitor the prices and brands of your favorite wines. It allows for more comprehensive insights and enables you to make informed decisions based on accurate and up-to-date information.

At Product Data Scrape, we ensure that our Competitor Price Monitoring Services and Mobile App Data Scraping maintain the highest standards of business ethics across all operations. We have multiple offices around the world to fulfill our customers' requirements.


Amazon Best Seller: Top 7 Tools To Scrape Data From Amazon

In the realm of e-commerce, data reigns supreme. The ability to gather and analyze data is key to understanding market trends, consumer behavior, and gaining a competitive edge. Amazon, being the e-commerce giant it is, holds a treasure trove of valuable data that businesses can leverage for insights and decision-making. However, manually extracting this data can be a daunting task, which is where web scraping tools come into play. Here, we unveil the top seven tools to scrape data from Amazon efficiently and effectively.

Scrapy: As one of the most powerful and flexible web scraping frameworks, Scrapy offers robust features for extracting data from websites, including Amazon. Its modular design and extensive documentation make it a favorite among developers for building scalable web crawlers. With Scrapy, you can navigate through Amazon's pages, extract product details, reviews, prices, and more with ease.

Octoparse: Ideal for non-programmers, Octoparse provides a user-friendly interface for creating web scraping workflows. Its point-and-click operation allows users to easily set up tasks to extract data from Amazon without writing a single line of code. Whether you need to scrape product listings, images, or seller information, Octoparse simplifies the process with its intuitive visual operation.

ParseHub: Another user-friendly web scraping tool, ParseHub, empowers users to turn any website, including Amazon, into structured data. Its advanced features, such as the ability to handle JavaScript-heavy sites and pagination, make it well-suited for scraping complex web pages. ParseHub's point-and-click interface and automatic data extraction make it a valuable asset for businesses looking to gather insights from Amazon.

Beautiful Soup: For Python enthusiasts, Beautiful Soup is a popular choice for parsing HTML and XML documents. Combined with the Requests library, Beautiful Soup enables developers to scrape data from Amazon with ease. Its simplicity and flexibility make it an excellent choice for extracting specific information, such as product titles, descriptions, and prices, from Amazon's web pages.

Apify: As a cloud-based platform for web scraping and automation, Apify offers a convenient solution for extracting data from Amazon at scale. With its ready-made scrapers called "actors," Apify simplifies the process of scraping Amazon's product listings, reviews, and other valuable information. Moreover, Apify's scheduling and monitoring features make it easy to keep your data up-to-date with Amazon's ever-changing content.

WebHarvy: Specifically designed for scraping data from web pages, WebHarvy excels at extracting structured data from Amazon and other e-commerce sites. Its point-and-click interface allows users to create scraping tasks effortlessly, even for dynamic websites like Amazon. Whether you need to scrape product details, images, or prices, WebHarvy provides a straightforward solution for extracting data in various formats.

Mechanical Turk: Unlike the other tools mentioned, Mechanical Turk takes a different approach to data extraction by leveraging human intelligence. As Amazon's own crowdsourcing marketplace, Mechanical Turk allows businesses to outsource repetitive tasks, such as data collection and data validation, to a distributed workforce. While it is not as automated as the other tools, Mechanical Turk offers flexibility and human judgment for complex data extraction tasks from Amazon that are hard to automate.

In conclusion, the ability to scrape data from Amazon is essential for businesses looking to gain insights into market trends, competitor strategies, and consumer behavior. With the right tools at your disposal, such as Scrapy, Octoparse, ParseHub, Beautiful Soup, Apify, WebHarvy, and Mechanical Turk, you can extract valuable data from Amazon efficiently and effectively. Whether you're a developer, data analyst, or business owner, these tools empower you to unlock the wealth of information that Amazon has to offer, giving you a competitive edge in the ever-evolving e-commerce landscape.


Building Blocks of a Website: A Simple Guide to Website Development

Although starting a website may seem like a difficult undertaking, have no fear! In this blog article, we'll break the website-building process down into simple steps. Let's examine the fundamentals of making your website work, whether you're a beginner or just need a refresher.

1. Define Your Purpose and Audience:

It's important to decide who your target audience is and what your website's goal is before getting too technical. Are you making a portfolio, a blog, or an online store? Making decisions on design and development will be guided by your goals.

2. Choose a domain name and hosting:

An essential early step in creating a website is choosing a domain name (your web address) and a hosting company. Pick a domain name that accurately describes your brand or content, and a reputable hosting provider so that people can reliably reach your website.

3. Plan Your Website Structure:

Think of your website as a book. Plan the primary sections (pages) and how they are arranged. Common pages include Home, About Us, Services or Products, and Contact. This planning stage helps you create a user-friendly navigation structure.

4. Website Design:

Now it's time to make your website visually appealing. Either use a website builder and templates, or hire a designer. Choose fonts, color schemes, and imagery that complement your brand and draw in customers.

5. Front-End Coding (HTML, CSS, JavaScript):

The front end is what users see and interact with. HTML (Hypertext Markup Language) structures your content, CSS (Cascading Style Sheets) styles it, and JavaScript makes it interactive. Many modern websites use frameworks such as React or Vue.js to simplify front-end development.

6. Back-End Development (Server, Database, Server-Side Language):

The back end powers your website's functionality. This involves configuring a server, choosing a database (such as MySQL or MongoDB), and handling data and background tasks with a server-side language or runtime (such as Python, PHP, or JavaScript on Node.js).
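To make the back-end step concrete, here is a minimal sketch in Python, one of the server-side languages mentioned above, using only the standard library. It assumes sqlite3 as a stand-in for a database such as MySQL, and a plain WSGI function as a stand-in for a web framework. The products table, its sample rows, and the /api/products route are hypothetical examples, and the built-in wsgiref server is meant for local development only.

```python
import json
import sqlite3
from wsgiref.simple_server import make_server


def init_db(conn):
    """Create a hypothetical products table and seed it with sample rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products ("
        "id INTEGER PRIMARY KEY, name TEXT NOT NULL, price REAL NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO products (name, price) VALUES (?, ?)",
        [("Notebook", 3.5), ("Pen", 1.25)],
    )
    conn.commit()


def make_app(conn):
    """Return a WSGI application that serves the products table as JSON."""

    def app(environ, start_response):
        path = environ.get("PATH_INFO", "/")
        if path == "/api/products":
            rows = conn.execute(
                "SELECT id, name, price FROM products ORDER BY id"
            ).fetchall()
            payload = [{"id": r[0], "name": r[1], "price": r[2]} for r in rows]
            body = json.dumps(payload).encode("utf-8")
            status, content_type = "200 OK", "application/json"
        else:
            body, status, content_type = b"Not Found", "404 Not Found", "text/plain"
        start_response(status, [("Content-Type", content_type),
                                ("Content-Length", str(len(body)))])
        return [body]

    return app


def run(port=8000):
    """Serve the app locally (development only); blocks until interrupted."""
    conn = sqlite3.connect(":memory:")
    init_db(conn)
    with make_server("127.0.0.1", port, make_app(conn)) as httpd:
        httpd.serve_forever()
```

Calling run() serves the data at http://127.0.0.1:8000/api/products. A framework such as Django or Flask would replace the hand-written routing, but the division of labor (server, database, server-side code) stays the same.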

7. Integrate Functionality (APIs, Plugins):

Use APIs (application programming interfaces) or plugins if your website requires additional functionality, such as payment gateways or social network integration. These tools make it possible for your website to easily communicate with outside services.
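Most third-party APIs are called over HTTP and return JSON. The Python sketch below shows that pattern with the standard library only. The endpoint https://api.example.com/v1/rates and its base parameter are hypothetical placeholders, not a real service, and real providers (payment gateways in particular) also require authentication, which is omitted here. Splitting request building and response parsing into small functions keeps them testable without network access.

```python
import json
import urllib.request
from urllib.parse import urlencode


def build_request(base_url, params):
    """Build a GET request for a JSON API, with query parameters URL-encoded."""
    url = f"{base_url}?{urlencode(params)}"
    return urllib.request.Request(url, headers={"Accept": "application/json"})


def parse_json(raw_bytes):
    """Decode a UTF-8 JSON response body into Python objects."""
    return json.loads(raw_bytes.decode("utf-8"))


def fetch_json(base_url, params, timeout=10):
    """Call the API and return the decoded JSON.

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses and
    urllib.error.URLError for network failures; callers should handle both.
    """
    with urllib.request.urlopen(build_request(base_url, params), timeout=timeout) as response:
        return parse_json(response.read())


# Hypothetical endpoint, for illustration only (requires network access):
# rates = fetch_json("https://api.example.com/v1/rates", {"base": "USD"})
```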

8. Test Your Website:

Test your website thoroughly before launching to catch bugs and usability problems. Verify that every feature works as expected, and check that the site is responsive and displays correctly across a range of browsers and devices.
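Cross-browser and device testing needs real browsers, but some checks are easy to automate. As one small example, this Python sketch uses the standard library's html.parser to find internal links that point to pages that do not exist. The page list and sample HTML are hypothetical, and only root-relative links such as /about are checked; external links and same-page anchors are skipped.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def broken_internal_links(html, known_paths):
    """Return root-relative links whose path is not in known_paths."""
    collector = LinkCollector()
    collector.feed(html)
    broken = []
    for href in collector.links:
        parsed = urlparse(href)
        # Skip external links (https://..., mailto:...) and same-page anchors (#top).
        if parsed.scheme or parsed.netloc or not parsed.path.startswith("/"):
            continue
        if parsed.path not in known_paths:
            broken.append(href)
    return broken


page = (
    '<a href="/about">About</a> <a href="/contcat">Contact</a> '
    '<a href="https://example.org">Partner</a> <a href="#top">Top</a>'
)
print(broken_internal_links(page, {"/", "/about", "/contact"}))
```

Here the misspelled /contcat link is reported, while the external link and the #top anchor are ignored. Running a check like this over every page before launch catches broken navigation cheaply.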

9. Search Engine Optimization (SEO):

Making your website search-engine-friendly increases its visibility. This involves making sure your content is easy for search engine crawlers to access, using relevant keywords, and writing descriptive meta tags.
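Descriptive meta tags are one part of SEO that code can help keep consistent. This hypothetical Python helper builds a page's title, meta description, and canonical link, escaping special characters with html.escape. The 160-character trim is a common rule of thumb for how much of a description search results tend to show, not a fixed limit, so treat it as an assumption you can adjust.

```python
from html import escape


def seo_head(title, description, canonical_url, max_description=160):
    """Return <head> tags for a page: title, meta description, canonical URL."""
    if len(description) > max_description:
        # Trim long descriptions, leaving room for a single ellipsis character.
        description = description[: max_description - 1].rstrip() + "…"
    return "\n".join([
        f"<title>{escape(title)}</title>",
        f'<meta name="description" content="{escape(description)}">',
        f'<link rel="canonical" href="{escape(canonical_url)}">',
    ])


print(seo_head("Tea & Cakes Menu", "Fresh cakes and loose-leaf teas, baked daily.",
               "https://example.com/menu"))
```

The ampersand in the title is emitted as &amp;, so titles and descriptions containing quotes or ampersands cannot break the surrounding HTML.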

10. Go Live with Your Website:

Congratulations! You're ready to launch. Once your domain is pointed at your hosting, your website will go live and be available to everyone.

Planning, designing, coding, and testing are all steps in the methodical process of creating a website. With the correct strategy and a little imagination, you can make a website that looks amazing and accomplishes its goals well. Website building is an exciting process, whether your goals are to sell things, share your opinions with the world, or showcase your work.

If you're interested in learning more about full-stack development, the Full Stack Developer course in Hyderabad is a great place to start, with opportunities for certification and job placement. Experienced instructors guide your learning, and classes are offered both online and in person. Take it step by step, and if you're interested, consider signing up for a course.

Thank you for spending your valuable time, and have a great day.

From Pixels to Python: Google Snake Game's Tech Transformation

In the vast landscape of the web, Google has been a constant trailblazer, not only in search technology but also in providing engaging, interactive experiences for its users. One hidden gem that has captivated millions is the Google Snake Game. Simple yet addictive, the game has evolved over the years, leaving a trail of nostalgia and technical milestones. In this article, we'll trace the evolution of Google's Snake Game, exploring its origins, its development, and its impact on how we think about interactive content.

I. The Birth of Google's Snake Game

A. The Origins of Google Easter Eggs

Google has a tradition of hiding Easter eggs (secret features or surprises) in its products, which users enjoy discovering. The snake game is best remembered from the early days of mobile phones, when Nokia's Snake became a sensation. Google, always keen to add a touch of whimsy, decided to create its own version.

B. Google Search Easter Eggs

The first version of Google's Snake Game appeared as an Easter egg in the search engine itself. Users could reach the game by typing "play snake game" into the search bar, which brought up a playable version of the classic Snake. This simple but clever integration let users enjoy a quick gaming session without leaving the familiar Google environment.

II. Web and Mobile Integration

A. Chrome Browser Extension

As the game's popularity grew, Google decided to take it a step further. The introduction of the Google Snake Game Chrome extension let users play the game directly in their browsers. The extension made the game more accessible, since users no longer needed to start a search to enjoy a round of Snake.

B. Mobile Integration and Accessibility

Recognizing the growing dominance of smartphones, Google optimized its Snake Game for mobile platforms. Users could now play on their phones simply by searching for "play snake game" in the Google app. Touch-friendly controls made for a seamless experience, and availability on both Android and iOS broadened the game's reach.

III. Technological Advances and Innovations

A. AI Integration

Google has long been at the forefront of artificial intelligence, and it did not miss the chance to give Snake Game a touch of AI. The game's AI mode added a new dimension in which the snake's movements did not depend solely on user input. Instead, the snake showed a degree of autonomous decision-making, making the game more challenging and dynamic.

B. Virtual Reality (VR) Snake Game

As VR technology gained momentum, Google adapted its Snake Game to offer a virtual reality experience. Users could immerse themselves in the game and experience Snake in three-dimensional space. This step not only showed Google's commitment to staying at the cutting edge of technology but also hinted at the potential of blending classic games with state-of-the-art advances.

IV. Collaborations and Special Editions

A. Collaborative Efforts

Google's Snake Game did not stay confined to its original form. Collaborations with other brands and franchises produced special editions with themed elements and unique challenges. From movie tie-ins to seasonal collaborations, these special editions kept the game fresh and engaging for a diverse audience.

B. Google Doodles

The game found a natural home in Google Doodles, the creative and interactive variations of the Google logo on the search engine's homepage. Snake-themed Doodles celebrated milestones, anniversaries, and sometimes simply the joy of gaming itself. These Doodles turned the search engine into a temporary gaming hub, blending nostalgia with modern design.

V. Cultural Impact

A. Nostalgia and Sentimental Value

In all its versions, Google's Snake Game carries a strong sense of nostalgia. For people who grew up with early mobile phones, the game serves as a bridge between the past and the present. Its simplicity and familiarity evoke a sense of comfort, making it a timeless piece of digital entertainment.

B. Social Connection and Competition

The game's integration with social platforms added a competitive layer. Users could share their high scores, challenge friends, and take part in friendly competitions. This social aspect turned the solitary act of playing a game into a shared experience, fostering a sense of community among players worldwide.

VI. Challenges and Criticisms

A. Repetitiveness and Monotony

Despite its enduring popularity, Google's Snake Game has been criticized as repetitive and monotonous. Some argue that the game's simplicity, while charming, limits its long-term appeal. Google's challenge has been to balance the game's nostalgic charm with innovations that keep it engaging for a broad audience.

B. Technological Limitations

The evolution of Google's Snake Game has been shaped by technological advances. However, its ability to use cutting-edge technology has been constrained by the need to stay accessible across a wide range of devices and platforms. Striking that balance has presented both opportunities and challenges.

VII. The Future of Google's Snake Game

A. Continuous Iterations and Updates

Google's commitment to giving users a delightful, interactive experience suggests that Snake Game will continue to evolve. Regular updates, new features, and perhaps unexpected collaborations could keep the game relevant and appealing to future generations.

B. Integration with Emerging Technologies

As technology continues to advance, Google's Snake Game could explore deeper integration with emerging technologies such as augmented reality (AR) and extended reality (XR). These innovations could turn the game into a more immersive and interactive experience, pushing the limits of what a classic game can achieve in the digital age.

VIII. Conclusion: A Timeless Classic in the Digital Age

In conclusion, the evolution of Google's Snake Game is a testament to the enduring appeal of simple yet captivating entertainment. From its humble beginnings as a hidden Easter egg to its wide availability across browsers, mobile devices, and even virtual reality, the game has adapted to a changing technological landscape while keeping its nostalgic appeal.

Looking back on the journey of Google's Snake Game, it is clear that its impact extends beyond mere amusement. It represents a bridge between the analog and digital eras, a connection between generations who have witnessed the evolution of technology. The game's ability to adapt, collaborate, and stay culturally relevant positions it as a timeless classic in the ever-evolving landscape of digital entertainment. Whether you play it for a moment of nostalgia or discover it for the first time, Google's Snake Game keeps slithering its way into the hearts of users around the world, leaving a playful trail across the vast expanse of the web.

Python Development Unleashed: SEO-Friendly Practices for Success

In the ever-evolving landscape of web development, Python has emerged as a powerful and versatile programming language. Its simplicity, readability, and extensive ecosystem make it a top choice for developers worldwide. As businesses work to strengthen their online presence, search engine optimization (SEO) becomes a crucial factor. In this article, we'll explore how to pair Python web development with SEO-friendly practices to achieve online success.

1. The Python Advantage in SEO

Python's readability and clean syntax provide a solid foundation for building SEO-friendly sites. Search engines prioritize well-structured, easily understandable content, but crawlers never see your Python source; they see the HTML your application serves. Python's simplicity makes it easier to write and maintain clean code that generates well-structured HTML, which search engine crawlers can navigate efficiently.

2. Embrace Frameworks for SEO Boost

Choosing the right framework can significantly affect your website's SEO performance. Django and Flask are popular Python web frameworks that support SEO best practices. Django, for example, includes a URL dispatcher for clean URLs and a built-in sitemap framework (django.contrib.sitemaps), while Flask gives developers the flexibility to implement SEO-friendly practices tailored to their specific needs.
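Django's sitemap framework generates sitemap.xml for you, while in a minimal Flask app you would typically build it yourself. As a framework-agnostic sketch, this Python function writes a minimal sitemap in the sitemaps.org format using only xml.etree.ElementTree. The page URLs and dates are hypothetical, and in a Flask app you would return the resulting string from a route with an application/xml content type.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap.xml from (url, lastmod) pairs; lastmod is 'YYYY-MM-DD' or None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes &, <, > in text
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    declaration = '<?xml version="1.0" encoding="UTF-8"?>\n'
    return declaration + ET.tostring(urlset, encoding="unicode")


print(build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/python-seo-tips", None),
]))
```

Generating the sitemap from the same list of routes your application serves keeps it from drifting out of date as pages are added.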

3. Optimize URL Structures for SEO

A well-optimized URL structure is a fundamental aspect of SEO. Python web frameworks often include features that facilitate the creation of clean and meaningful URLs. Developers can leverage these features to structure URLs that are both user-friendly and optimized for search engines. Avoid long and convoluted URLs, and instead, opt for short, descriptive ones that include relevant keywords.
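Short, keyword-bearing URLs usually come from a "slug" derived from the page title. Django ships django.utils.text.slugify for this; the standalone Python sketch below is similar in spirit but not identical. It drops accents, keeps only ASCII letters and digits, and caps the slug at a hypothetical max_words limit to keep URLs short.

```python
import re
import unicodedata


def slugify(title, max_words=8):
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Decompose accented characters (é becomes e + accent), then drop non-ASCII parts.
    ascii_title = (
        unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    )
    words = re.findall(r"[a-z0-9]+", ascii_title.lower())
    return "-".join(words[:max_words])


title = "10 Tips for Better Python SEO!"
print(f"/blog/{slugify(title)}/")
```

This prints /blog/10-tips-for-better-python-seo/, a short, descriptive URL that carries the page's keywords.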

4. Content is King: Leverage Python for Dynamic Content Generation